NOTE

This post was originally published on the Skyscanner Marketing Medium account. You can find that post here

It started with pavements

I read that when the University of California were building their campus in Berkeley, they couldn’t decide on where to pave the long stretches of grass. So instead, they didn’t. For the first year, students were trampling all over it like nobody’s business.

A year later, they revisited the issue of paths, and paved over the most deeply trampled lines — this was where most students were wanting to walk, after all. The university decided to not try to second-guess the students and instead used their behavior to dictate where they built the pavements to be as useful as possible.

How does this relate to an email?

In the Traveller Communication Squad, it’s our job to build systems that create, send and report on communications — such as Price Alerts, the automated Newsletter, Car Hire Reminders — via the most appropriate channel (emails, push notification, Google Now cards or SMS).

Our mission statement — “… to send relevant, personalised and consistent messages to our travellers” — explicitly mentions consistency. As part of achieving this, we’ve been working to redesign the emails we send to users to make them more consistent and to match the Skyscanner design guidelines.

All of the emails we send are thoroughly tracked. We know when a user has received an email, opened it, clicked on it, or reported it as spam. We’ve been using some of these metrics to determine the success of our redesigns: in particular, the click-through rate (‘CTR’, the percentage of recipients who clicked on a link in the email) and, if we’ve changed the subject line or pre-header, the open rate (‘OR’, the percentage of recipients who opened the email).
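To make the two metrics concrete, here is a minimal sketch of how they could be computed from per-email counts. The `EmailStats` class, its field names and the numbers in the usage example are assumptions for illustration only; they are not taken from our reporting platform.

```python
from dataclasses import dataclass


@dataclass
class EmailStats:
    """Hypothetical per-campaign counts; field names are illustrative."""
    delivered: int  # recipients who received the email
    opened: int     # recipients who opened it
    clicked: int    # recipients who clicked a link in it


def open_rate(stats: EmailStats) -> float:
    """Percentage of recipients who opened the email (OR)."""
    if stats.delivered == 0:
        return 0.0
    return 100 * stats.opened / stats.delivered


def click_through_rate(stats: EmailStats) -> float:
    """Percentage of recipients who clicked a link in the email (CTR)."""
    if stats.delivered == 0:
        return 0.0
    return 100 * stats.clicked / stats.delivered


if __name__ == "__main__":
    # Made-up numbers, purely to show the arithmetic.
    example = EmailStats(delivered=2000, opened=500, clicked=100)
    print(f"OR:  {open_rate(example):.1f}%")
    print(f"CTR: {click_through_rate(example):.1f}%")
```

Both rates use recipients as the denominator, matching the definitions above. Some teams measure clicks against opens instead, which gives a different number.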

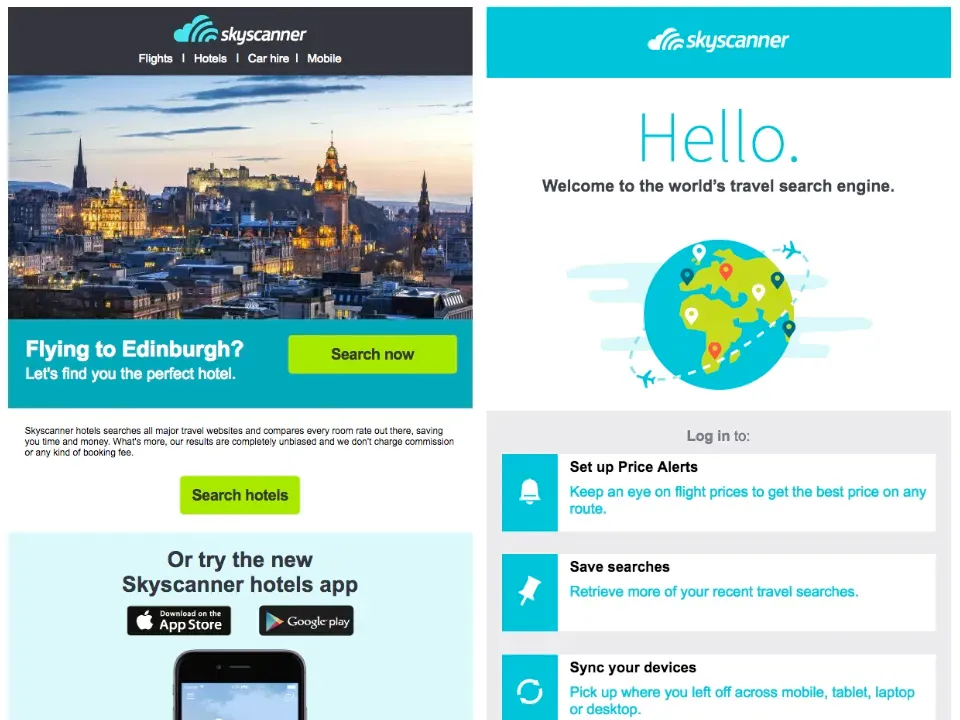

Naturally, different email products have been created at different times and as such, many of them have different styles. For example, below you can see the Hotels Cross Sell email alongside our Welcome email. Since the Hotels Cross Sell email was created outside of the squad, it’s one of the more drastically different designs. It also performs well relative to other emails, making it an interesting candidate for a careful redesign.

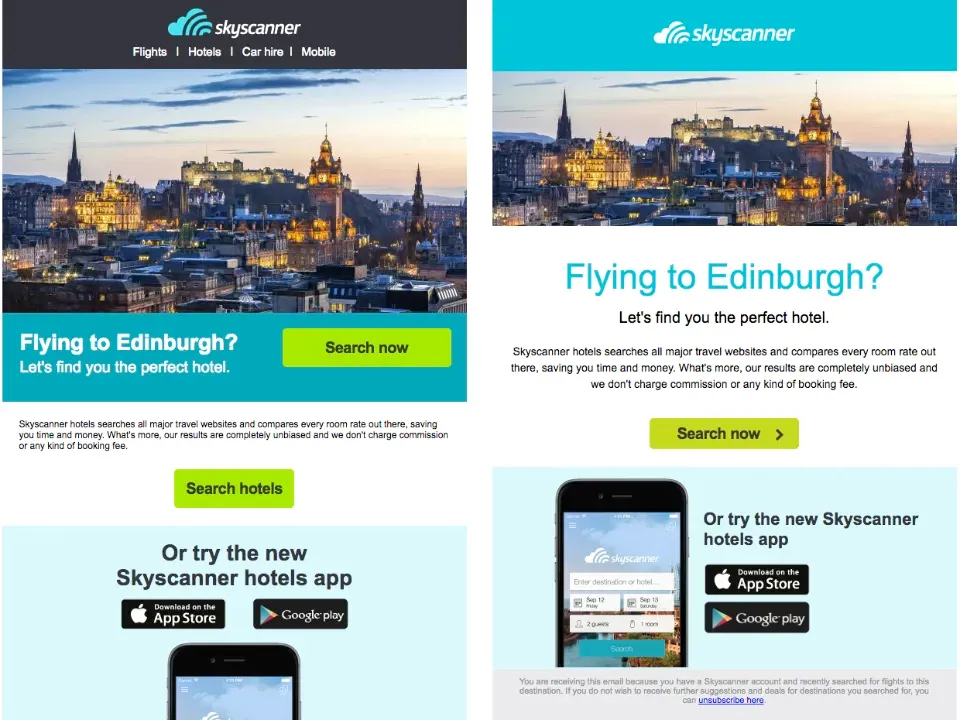

Redesign

We created a draft design for what the Hotels Cross Sell email (and our other comms) could look like, taking into consideration the technical limitations of email design and the current design guidelines. As a squad, we all much preferred this design and it was released as a B variant in an A/B test. For the next three weeks there was a 50% chance that recipients in the UK and Ireland would receive the new design.
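This post doesn’t go into how the 50/50 split was implemented. One common approach, sketched here as an assumption rather than a description of our system, is to hash a recipient identifier so that each recipient lands in the same variant every time. The function name, experiment label and recipient IDs are hypothetical.

```python
import hashlib


def assign_variant(recipient_id: str,
                   experiment: str = "hotels-cross-sell-redesign",
                   share_b: float = 0.5) -> str:
    """Deterministically assign a recipient to variant 'A' or 'B'.

    Hashing the experiment name together with the recipient ID gives a
    stable, roughly uniform value in [0, 1); recipients below `share_b`
    get the new design (B), everyone else keeps the original (A).
    """
    key = f"{experiment}:{recipient_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits, scaled to [0, 1)
    return "B" if bucket < share_b else "A"


if __name__ == "__main__":
    # Hypothetical recipient IDs, only to show the split is close to 50/50.
    variants = [assign_variant(f"user-{i}") for i in range(10_000)]
    print("A:", variants.count("A"), "B:", variants.count("B"))
```

A plain random draw per send would also give a 50% chance. Hashing has the side benefit that a recipient who gets the email more than once always sees the same design.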

The results were surprising

After about a week, we were eager to have a peek at the results. We checked our reporting platform. To our dismay, the original design was coming out ahead, by quite a margin. We left it another couple of weeks to ensure we gained enough results for statistical significance. By this time, the result was clear cut — the redesign performed worse than the original design.
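For readers wondering what “enough results for statistical significance” can look like in practice, here is a minimal sketch of a two-proportion z-test on click-through rates. This is one common choice, not necessarily the test our reporting platform runs, and the counts in the usage example are invented for illustration.

```python
import math


def two_proportion_z_test(clicks_a: int, sent_a: int,
                          clicks_b: int, sent_b: int) -> tuple[float, float]:
    """Compare the CTR of variant B against variant A.

    Returns (z, p_value), where z is negative when B performs worse
    than A and p_value is the two-sided p-value under the null
    hypothesis that both variants share the same click-through rate.
    """
    rate_a = clicks_a / sent_a
    rate_b = clicks_b / sent_b
    # Pooled rate under the null hypothesis of no difference.
    pooled = (clicks_a + clicks_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        raise ValueError("Cannot test: pooled click rate is 0% or 100%.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Invented counts: original design (A) versus redesign (B).
    z, p = two_proportion_z_test(clicks_a=600, sent_a=10_000,
                                 clicks_b=500, sent_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

Peeking at results after a week, as we did, is tempting. Deciding the sample size or test duration up front and only judging significance at the end avoids reading too much into early noise.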

What was quite interesting was our attitude to the first glance at the results. The initial reaction was that something was wrong with the A/B test. We waited for it to ‘correct’ itself. The tracking on the emails was checked. Logs were checked for errors that might offer some insights. It took us a while to accept that the new design was enticing fewer users to click than the original design.

Looking back now, this seems pretty naive and close-minded, but at the time this seemed like the most likely reason. Our previous redesigns had all positively affected the click-through rate so we expected the same with this test.

How does this relate to the University of California?

We started with the idea of redesigning this email, and created new designs to fit with branding. For this email type, this didn’t work, as users clearly prefer the old design. In retrospect, it’s clear to see that we were blinded by our own assumptions of what a user will prefer.

From here, we need to take a leaf out of UC Berkeley’s book and look at user behaviour: why did they prefer the old email, and how can we build a new email that they want to use just as much, if not more, but that also meets our own goal of consistent design? We’re planning on being much more open-minded about results like these, embracing a ‘Design Like We’re Right, Test Like We’re Wrong’ ethos.